Optimizing Serial Code

Chris Rackauckas

September 3rd, 2019

Youtube Video Link Part 1

Youtube Video Link Part 2

At the center of any fast parallel code is a fast serial code. Parallelism is made to be a performance multiplier, so if you start from a bad position it won't ever get much better. Thus the first thing that we need to do is understand what makes code slow and how to avoid the pitfalls. This discussion of serial code optimization will also directly motivate why we will be using Julia throughout this course.

Mental Model of a Memory

To start optimizing code you need a good mental model of a computer.

High Level View

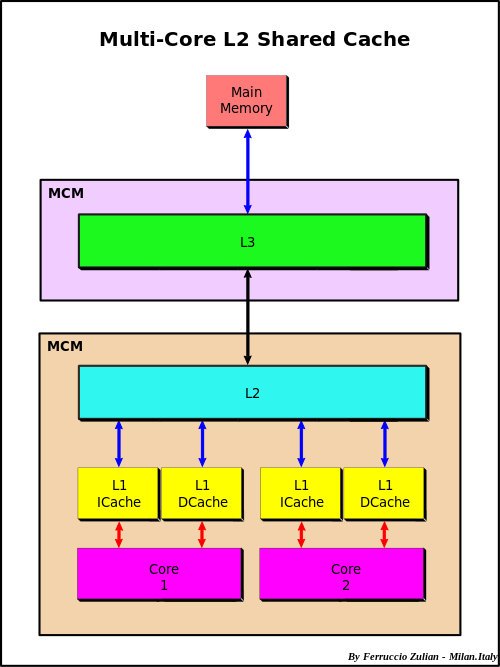

At the highest level you have a CPU's core memory which directly accesses a L1 cache. The L1 cache has the fastest access, so things which will be needed soon are kept there. However, it is filled from the L2 cache, which itself is filled from the L3 cache, which is filled from the main memory. This bring us to the first idea in optimizing code: using things that are already in a closer cache can help the code run faster because it doesn't have to be queried for and moved up this chain.

When something needs to be pulled directly from main memory this is known as a cache miss. To understand the cost of a cache miss vs standard calculations, take a look at this classic chart.

(Cache-aware and cache-oblivious algorithms are methods which change their indexing structure to optimize their use of the cache lines. We will return to this when talking about performance of linear algebra.)

Cache Lines and Row/Column-Major

Many algorithms in numerical linear algebra are designed to minimize cache misses. Because of this chain, many modern CPUs try to guess what you will want next in your cache. When dealing with arrays, it will speculate ahead and grab what is known as a cache line: the next chunk in the array. Thus, your algorithms will be faster if you iterate along the values that it is grabbing.

The values that it grabs are the next values in the contiguous order of the stored array. There are two common conventions: row major and column major. Row major means that the linear array of memory is formed by stacking the rows one after another, while column major puts the column vectors one after another.

Julia, MATLAB, and Fortran are column major. Python's numpy is row-major.

A = rand(100,100) B = rand(100,100) C = rand(100,100) using BenchmarkTools function inner_rows!(C,A,B) for i in 1:100, j in 1:100 C[i,j] = A[i,j] + B[i,j] end end @btime inner_rows!(C,A,B)

8.836 μs (0 allocations: 0 bytes)

function inner_cols!(C,A,B) for j in 1:100, i in 1:100 C[i,j] = A[i,j] + B[i,j] end end @btime inner_cols!(C,A,B)

3.158 μs (0 allocations: 0 bytes)

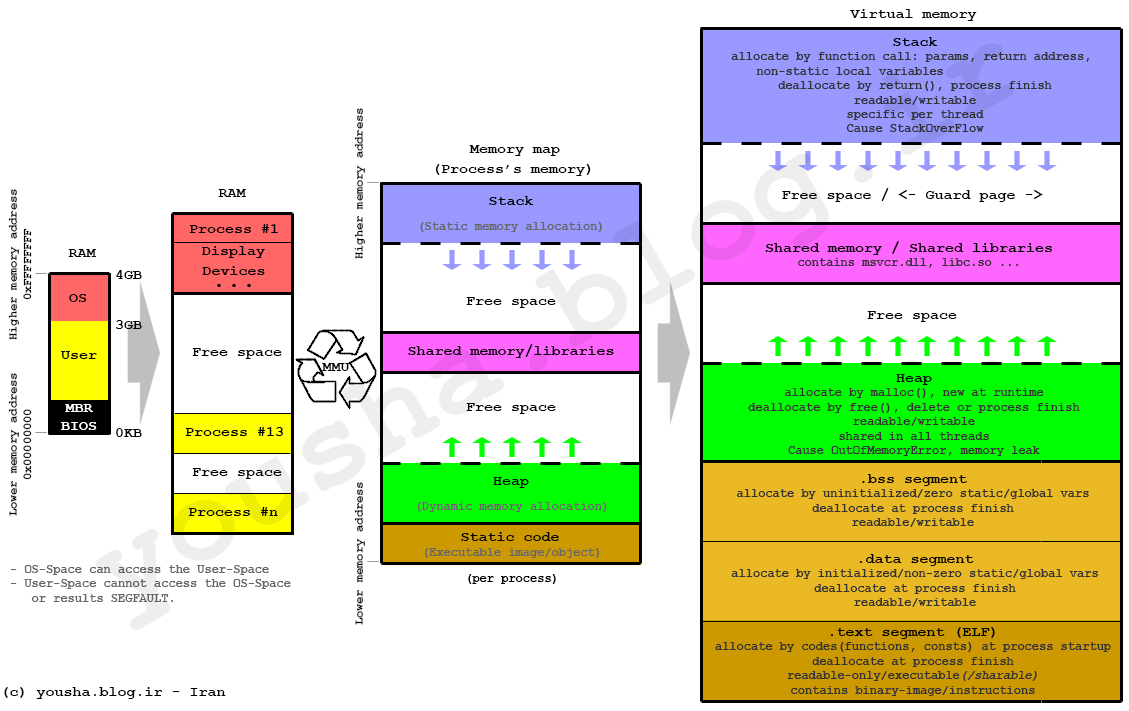

Lower Level View: The Stack and the Heap

Locally, the stack is composed of a stack and a heap. The stack requires a static allocation: it is ordered. Because it's ordered, it is very clear where things are in the stack, and therefore accesses are very quick (think instantaneous). However, because this is static, it requires that the size of the variables is known at compile time (to determine all of the variable locations). Since that is not possible with all variables, there exists the heap. The heap is essentially a stack of pointers to objects in memory. When heap variables are needed, their values are pulled up the cache chain and accessed.

Heap Allocations and Speed

Heap allocations are costly because they involve this pointer indirection, so stack allocation should be done when sensible (it's not helpful for really large arrays, but for small values like scalars it's essential!)

function inner_alloc!(C,A,B) for j in 1:100, i in 1:100 val = [A[i,j] + B[i,j]] C[i,j] = val[1] end end @btime inner_alloc!(C,A,B)

116.898 μs (10000 allocations: 312.50 KiB)

function inner_noalloc!(C,A,B) for j in 1:100, i in 1:100 val = A[i,j] + B[i,j] C[i,j] = val[1] end end @btime inner_noalloc!(C,A,B)

3.196 μs (0 allocations: 0 bytes)

Why does the array here get heap-allocated? It isn't able to prove/guarantee at compile-time that the array's size will always be a given value, and thus it allocates it to the heap. @btime tells us this allocation occurred and shows us the total heap memory that was taken. Meanwhile, the size of a Float64 number is known at compile-time (64-bits), and so this is stored onto the stack and given a specific location that the compiler will be able to directly address.

Note that one can use the StaticArrays.jl library to get statically-sized arrays and thus arrays which are stack-allocated:

using StaticArrays function static_inner_alloc!(C,A,B) for j in 1:100, i in 1:100 val = @SVector [A[i,j] + B[i,j]] C[i,j] = val[1] end end @btime static_inner_alloc!(C,A,B)

3.151 μs (0 allocations: 0 bytes)

Mutation to Avoid Heap Allocations

Many times you do need to write into an array, so how can you write into an array without performing a heap allocation? The answer is mutation. Mutation is changing the values of an already existing array. In that case, no free memory has to be found to put the array (and no memory has to be freed by the garbage collector).

In Julia, functions which mutate the first value are conventionally noted by a !. See the difference between these two equivalent functions:

function inner_noalloc!(C,A,B) for j in 1:100, i in 1:100 val = A[i,j] + B[i,j] C[i,j] = val[1] end end @btime inner_noalloc!(C,A,B)

3.157 μs (0 allocations: 0 bytes)

function inner_alloc(A,B) C = similar(A) for j in 1:100, i in 1:100 val = A[i,j] + B[i,j] C[i,j] = val[1] end end @btime inner_alloc(A,B)

3.469 μs (3 allocations: 78.21 KiB)

To use this algorithm effectively, the ! algorithm assumes that the caller already has allocated the output array to put as the output argument. If that is not true, then one would need to manually allocate. The goal of that interface is to give the caller control over the allocations to allow them to manually reduce the total number of heap allocations and thus increase the speed.

Julia's Broadcasting Mechanism

Wouldn't it be nice to not have to write the loop there? In many high level languages this is simply called vectorization. In Julia, we will call it array vectorization to distinguish it from the SIMD vectorization which is common in lower level languages like C, Fortran, and Julia.

In Julia, if you use . on an operator it will transform it to the broadcasted form. Broadcast is lazy: it will build up an entire .'d expression and then call broadcast! on composed expression. This is customizable and documented in detail. However, to a first approximation we can think of the broadcast mechanism as a mechanism for building fused expressions. For example, the Julia code:

A .+ B .+ C;

under the hood lowers to something like:

map((a,b,c)->a+b+c,A,B,C);

where map is a function that just loops over the values element-wise.

Take a quick second to think about why loop fusion may be an optimization.

This about what would happen if you did not fuse the operations. We can write that out as:

tmp = A .+ B tmp .+ C;

Notice that if we did not fuse the expressions, we would need some place to put the result of A .+ B, and that would have to be an array, which means it would cause a heap allocation. Thus broadcast fusion eliminates the temporary variable (colloquially called just a temporary).

function unfused(A,B,C) tmp = A .+ B tmp .+ C end @btime unfused(A,B,C);

6.059 μs (6 allocations: 156.42 KiB)

fused(A,B,C) = A .+ B .+ C @btime fused(A,B,C);

3.907 μs (3 allocations: 78.21 KiB)

Note that we can also fuse the output by using .=. This is essentially the vectorized version of a ! function:

D = similar(A) fused!(D,A,B,C) = (D .= A .+ B .+ C) @btime fused!(D,A,B,C);

3.443 μs (0 allocations: 0 bytes)

Note on Broadcasting Function Calls

Julia allows for broadcasting the call () operator as well. .() will call the function element-wise on all arguments, so sin.(A) will be the elementwise sine function. This will fuse Julia like the other operators.

Note on Vectorization and Speed

In articles on MATLAB, Python, R, etc., this is where you will be told to vectorize your code. Notice from above that this isn't a performance difference between writing loops and using vectorized broadcasts. This is not abnormal! The reason why you are told to vectorize code in these other languages is because they have a high per-operation overhead (which will be discussed further down). This means that every call, like +, is costly in these languages. To get around this issue and make the language usable, someone wrote and compiled the loop for the C/Fortran function that does the broadcasted form (see numpy's Github repo). Thus A .+ B's MATLAB/Python/R equivalents are calling a single C function to generally avoid the cost of function calls and thus are faster.

But this is not an intrinsic property of vectorization. Vectorization isn't "fast" in these languages, it's just close to the correct speed. The reason vectorization is recommended is because looping is slow in these languages. Because looping isn't slow in Julia (or C, C++, Fortran, etc.), loops and vectorization generally have the same speed. So use the one that works best for your code without a care about performance.

(As a small side effect, these high level languages tend to allocate a lot of temporary variables since the individual C kernels are written for specific numbers of inputs and thus don't naturally fuse. Julia's broadcast mechanism is just generating and JIT compiling Julia functions on the fly, and thus it can accommodate the combinatorial explosion in the amount of choices just by only compiling the combinations that are necessary for a specific code)

Heap Allocations from Slicing

It's important to note that slices in Julia produce copies instead of views. Thus for example:

A[50,50]

0.8838163777583874

allocates a new output. This is for safety, since if it pointed to the same array then writing to it would change the original array. We can demonstrate this by asking for a view instead of a copy.

@show A[1] E = @view A[1:5,1:5] E[1] = 2.0 @show A[1]

A[1] = 0.9096448288783205 A[1] = 2.0 2.0

However, this means that @view A[1:5,1:5] did not allocate an array (it does allocate a pointer if the escape analysis is unable to prove that it can be elided. This means that in small loops there will be no allocation, while if the view is returned from a function for example it will allocate the pointer, ~80 bytes, but not the memory of the array. This means that it is O(1) in cost but with a relatively small constant).

Asymptotic Cost of Heap Allocations

Heap allocations have to locate and prepare a space in RAM that is proportional to the amount of memory that is calculated, which means that the cost of a heap allocation for an array is O(n), with a large constant. As RAM begins to fill up, this cost dramatically increases. If you run out of RAM, your computer may begin to use swap, which is essentially RAM simulated on your hard drive. Generally when you hit swap your performance is so dead that you may think that your computation froze, but if you check your resource use you will notice that it's actually just filled the RAM and starting to use the swap.

But think of it as O(n) with a large constant factor. This means that for operations which only touch the data once, heap allocations can dominate the computational cost:

using LinearAlgebra, BenchmarkTools function alloc_timer(n) A = rand(n,n) B = rand(n,n) C = rand(n,n) t1 = @belapsed $A .* $B t2 = @belapsed ($C .= $A .* $B) t1,t2 end ns = 2 .^ (2:11) res = [alloc_timer(n) for n in ns] alloc = [x[1] for x in res] noalloc = [x[2] for x in res] using Plots plot(ns,alloc,label="=",xscale=:log10,yscale=:log10,legend=:bottomright, title="Micro-optimizations matter for BLAS1") plot!(ns,noalloc,label=".=")

However, when the computation takes O(n^3), like in matrix multiplications, the high constant factor only comes into play when the matrices are sufficiently small:

using LinearAlgebra, BenchmarkTools function alloc_timer(n) A = rand(n,n) B = rand(n,n) C = rand(n,n) t1 = @belapsed $A*$B t2 = @belapsed mul!($C,$A,$B) t1,t2 end ns = 2 .^ (2:7) res = [alloc_timer(n) for n in ns] alloc = [x[1] for x in res] noalloc = [x[2] for x in res] using Plots plot(ns,alloc,label="*",xscale=:log10,yscale=:log10,legend=:bottomright, title="Micro-optimizations only matter for small matmuls") plot!(ns,noalloc,label="mul!")

Though using a mutating form is never bad and always is a little bit better.

Optimizing Memory Use Summary

Avoid cache misses by reusing values

Iterate along columns

Avoid heap allocations in inner loops

Heap allocations occur when the size of things is not proven at compile-time

Use fused broadcasts (with mutated outputs) to avoid heap allocations

Array vectorization confers no special benefit in Julia because Julia loops are as fast as C or Fortran

Use views instead of slices when applicable

Avoiding heap allocations is most necessary for O(n) algorithms or algorithms with small arrays

Use StaticArrays.jl to avoid heap allocations of small arrays in inner loops

Julia's Type Inference and the Compiler

Many people think Julia is fast because it is JIT compiled. That is simply not true (we've already shown examples where Julia code isn't fast, but it's always JIT compiled!). Instead, the reason why Julia is fast is because the combination of two ideas:

Type inference

Type specialization in functions

These two features naturally give rise to Julia's core design feature: multiple dispatch. Let's break down these pieces.

Type Inference

At the core level of the computer, everything has a type. Some languages are more explicit about said types, while others try to hide the types from the user. A type tells the compiler how to to store and interpret the memory of a value. For example, if the compiled code knows that the value in the register is supposed to be interpreted as a 64-bit floating point number, then it understands that slab of memory like:

Importantly, it will know what to do for function calls. If the code tells it to add two floating point numbers, it will send them as inputs to the Floating Point Unit (FPU) which will give the output.

If the types are not known, then... ? So one cannot actually compute until the types are known, since otherwise it's impossible to interpret the memory. In languages like C, the programmer has to declare the types of variables in the program:

void add(double *a, double *b, double *c, size_t n){

size_t i;

for(i = 0; i < n; ++i) {

c[i] = a[i] + b[i];

}

}The types are known at compile time because the programmer set it in stone. In many interpreted languages Python, types are checked at runtime. For example,

a = 2

b = 4

a + bwhen the addition occurs, the Python interpreter will check the object holding the values and ask it for its types, and use those types to know how to compute the + function. For this reason, the add function in Python is rather complex since it needs to decode and have a version for all primitive types!

Not only is there runtime overhead checks in function calls due to not being explicit about types, there is also a memory overhead since it is impossible to know how much memory a value will take since that's a property of its type. Thus the Python interpreter cannot statically guarantee exact unchanging values for the size that a value would take in the stack, meaning that the variables are not stack-allocated. This means that every number ends up heap-allocated, which hopefully begins to explain why this is not as fast as C.

The solution is Julia is somewhat of a hybrid. The Julia code looks like:

a = 2 b = 4 a + b

6

However, before JIT compilation, Julia runs a type inference algorithm which finds out that A is an Int, and B is an Int. You can then understand that if it can prove that A+B is an Int, then it can propagate all of the types through.

Type Specialization in Functions

Julia is able to propagate type inference through functions because, even if a function is "untyped", Julia will interpret this as a generic function over possible methods, where every method has a concrete type. This means that in Julia, the function:

f(x,y) = x+y

f (generic function with 1 method)

is not what you may think of as a "single function", since given inputs of different types it will actually be a different function. We can see this by examining the LLVM IR (LLVM is Julia's compiler, the IR is the Intermediate Representation, i.e. a platform-independent representation of assembly that lives in LLVM that it knows how to convert into assembly per architecture):

using InteractiveUtils @code_llvm f(2,5)

; Function Signature: f(Int64, Int64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `f`

define i64 @julia_f_21198(i64 signext %"x::Int64", i64 signext %"y::Int64")

#0 {

top:

; ┌ @ int.jl:87 within `+`

%0 = add i64 %"y::Int64", %"x::Int64"

ret i64 %0

; └

}

@code_llvm f(2.0,5.0)

; Function Signature: f(Float64, Float64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `f`

define double @julia_f_21201(double %"x::Float64", double %"y::Float64") #0

{

top:

; ┌ @ float.jl:491 within `+`

%0 = fadd double %"x::Float64", %"y::Float64"

ret double %0

; └

}

Notice that when f is the function that takes in two Ints, Ints add to give an Int and thus f outputs an Int. When f is the function that takes two Float64s, f returns a Float64. Thus in the code:

function g(x,y) a = 4 b = 2 c = f(x,a) d = f(b,c) f(d,y) end @code_llvm g(2,5)

; Function Signature: g(Int64, Int64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

define i64 @julia_g_21205(i64 signext %"x::Int64", i64 signext %"y::Int64")

#0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ int.jl:87 within `+`

%0 = add i64 %"x::Int64", 6

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ int.jl:87 within `+`

%1 = add i64 %0, %"y::Int64"

ret i64 %1

; └└

}

g on two Int inputs is a function that has Ints at every step along the way and spits out an Int. We can use the @code_warntype macro to better see the inference along the steps of the function:

@code_warntype g(2,5)

MethodInstance for g(::Int64, ::Int64) from g(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin g.jmd:2 Arguments #self#::Core.Const(Main.g) x::Int64 y::Int64 Locals d::Int64 c::Int64 b::Int64 a::Int64 Body::Int64 1 ─ (a = 4) │ (b = 2) │ %3 = a::Core.Const(4) │ (c = Main.f(x, %3)) │ %5 = b::Core.Const(2) │ %6 = c::Int64 │ (d = Main.f(%5, %6)) │ %8 = d::Int64 │ %9 = Main.f(%8, y)::Int64 └── return %9

What happens on mixtures?

@code_llvm f(2.0,5)

; Function Signature: f(Float64, Int64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `f`

define double @julia_f_23405(double %"x::Float64", i64 signext %"y::Int64")

#0 {

top:

; ┌ @ promotion.jl:429 within `+`

; │┌ @ promotion.jl:400 within `promote`

; ││┌ @ promotion.jl:375 within `_promote`

; │││┌ @ number.jl:7 within `convert`

; ││││┌ @ float.jl:239 within `Float64`

%0 = sitofp i64 %"y::Int64" to double

; │└└└└

; │ @ promotion.jl:429 within `+` @ float.jl:491

%1 = fadd double %0, %"x::Float64"

ret double %1

; └

}

When we add an Int to a Float64, we promote the Int to a Float64 and then perform the + between two Float64s. When we go to the full function, we see that it can still infer:

@code_warntype g(2.0,5)

MethodInstance for g(::Float64, ::Int64) from g(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin g.jmd:2 Arguments #self#::Core.Const(Main.g) x::Float64 y::Int64 Locals d::Float64 c::Float64 b::Int64 a::Int64 Body::Float64 1 ─ (a = 4) │ (b = 2) │ %3 = a::Core.Const(4) │ (c = Main.f(x, %3)) │ %5 = b::Core.Const(2) │ %6 = c::Float64 │ (d = Main.f(%5, %6)) │ %8 = d::Float64 │ %9 = Main.f(%8, y)::Float64 └── return %9

and it uses this to build a very efficient assembly code because it knows exactly what the types will be at every step:

@code_llvm g(2.0,5)

; Function Signature: g(Float64, Int64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

define double @julia_g_23419(double %"x::Float64", i64 signext %"y::Int64")

#0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ promotion.jl:429 within `+` @ float.jl:491

%0 = fadd double %"x::Float64", 4.000000e+00

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ promotion.jl:429 within `+` @ float.jl:491

%1 = fadd double %0, 2.000000e+00

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ promotion.jl:429 within `+`

; ││┌ @ promotion.jl:400 within `promote`

; │││┌ @ promotion.jl:375 within `_promote`

; ││││┌ @ number.jl:7 within `convert`

; │││││┌ @ float.jl:239 within `Float64`

%2 = sitofp i64 %"y::Int64" to double

; ││└└└└

; ││ @ promotion.jl:429 within `+` @ float.jl:491

%3 = fadd double %1, %2

ret double %3

; └└

}

(notice how it handles the constant literals 4 and 2: it converted them at compile time to reduce the algorithm to 3 floating point additions).

Type Stability

Why is the inference algorithm able to infer all of the types of g? It's because it knows the types coming out of f at compile time. Given an Int and a Float64, f will always output a Float64, and thus it can continue with inference knowing that c, d, and eventually the output is Float64. Thus in order for this to occur, we need that the type of the output on our function is directly inferred from the type of the input. This property is known as type-stability.

An example of breaking it is as follows:

function h(x,y) out = x + y rand() < 0.5 ? out : Float64(out) end

h (generic function with 1 method)

Here, on an integer input the output's type is randomly either Int or Float64, and thus the output is unknown:

@code_warntype h(2,5)

MethodInstance for h(::Int64, ::Int64)

from h(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin

g.jmd:2

Arguments

#self#::Core.Const(Main.h)

x::Int64

y::Int64

Locals

out::Int64

Body::UNION{FLOAT64, INT64}

1 ─ (out = x + y)

│ %2 = Main.:<::Core.Const(<)

│ %3 = Main.rand()::Float64

│ %4 = (%2)(%3, 0.5)::Bool

└── goto #3 if not %4

2 ─ %6 = out::Int64

└── return %6

3 ─ %8 = out::Int64

│ %9 = Main.Float64(%8)::Float64

└── return %9

This means that its output type is Union{Int,Float64} (Julia uses union types to keep the types still somewhat constrained). Once there are multiple choices, those need to get propagate through the compiler, and all subsequent calculations are the result of either being an Int or a Float64.

(Note that Julia has small union optimizations, so if this union is of size 4 or less then Julia will still be able to optimize it quite a bit.)

Multiple Dispatch

The + function on numbers was implemented in Julia, so how were these rules all written down? The answer is multiple dispatch. In Julia, you can tell a function how to act differently on different types by using type assertions on the input values. For example, let's make a function that computes 2x + y on Int and x/y on Float64:

ff(x::Int,y::Int) = 2x + y ff(x::Float64,y::Float64) = x/y @show ff(2,5) @show ff(2.0,5.0)

ff(2, 5) = 9 ff(2.0, 5.0) = 0.4 0.4

The + function in Julia is just defined as +(a,b), and we can actually point to that code in the Julia distribution:

@which +(2.0,5)+(x::Number, y::Number) in Base at promotion.jl:429

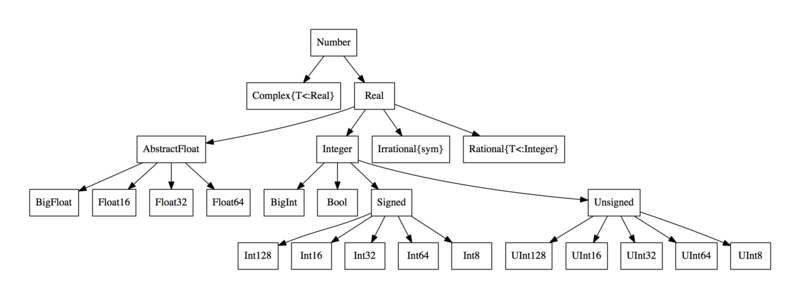

To control at a higher level, Julia uses abstract types. For example, Float64 <: AbstractFloat, meaning Float64s are a subtype of AbstractFloat. We also have that Int <: Integer, while both AbstractFloat <: Number and Integer <: Number.

Julia allows the user to define dispatches at a higher level, and the version that is called is the most strict version that is correct. For example, right now with ff we will get a MethodError if we call it between a Int and a Float64 because no such method exists:

ff(2.0,5)

ERROR: MethodError: no method matching ff(::Float64, ::Int64) The function `ff` exists, but no method is defined for this combination of argument types. Closest candidates are: ff(::Float64, !Matched::Float64) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:3 ff(!Matched::Int64, ::Int64) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:2

However, we can add a fallback method to the function ff for two numbers:

ff(x::Number,y::Number) = x + y ff(2.0,5)

7.0

Notice that the fallback method still specializes on the inputs:

@code_llvm ff(2.0,5)

; Function Signature: ff(Float64, Int64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `ff`

define double @julia_ff_24263(double %"x::Float64", i64 signext %"y::Int64"

) #0 {

top:

; ┌ @ promotion.jl:429 within `+`

; │┌ @ promotion.jl:400 within `promote`

; ││┌ @ promotion.jl:375 within `_promote`

; │││┌ @ number.jl:7 within `convert`

; ││││┌ @ float.jl:239 within `Float64`

%0 = sitofp i64 %"y::Int64" to double

; │└└└└

; │ @ promotion.jl:429 within `+` @ float.jl:491

%1 = fadd double %0, %"x::Float64"

ret double %1

; └

}

It's essentially just a template for what functions to possibly try and create given the types that are seen. When it sees Float64 and Int, it knows it should try and create the function that does x+y, and once it knows it's Float64 plus a Int, it knows it should create the function that converts the Int to a Float64 and then does addition between two Float64s, and that is precisely the generated LLVM IR on this pair of input types.

And that's essentially Julia's secret sauce: since it's always specializing its types on each function, if those functions themselves can infer the output, then the entire function can be inferred and generate optimal code, which is then optimized by the compiler and out comes an efficient function. If types can't be inferred, Julia falls back to a slower "Python" mode (though with optimizations in cases like small unions). Users then get control over this specialization process through multiple dispatch, which is then Julia's core feature since it allows adding new options without any runtime cost.

Any Fallbacks

Note that f(x,y) = x+y is equivalent to f(x::Any,y::Any) = x+y, where Any is the maximal supertype of every Julia type. Thus f(x,y) = x+y is essentially a fallback for all possible input values, telling it what to do in the case that no other dispatches exist. However, note that this dispatch itself is not slow, since it will be specialized on the input types.

Ambiguities

The version that is called is the most strict version that is correct. What happens if it's impossible to define "the most strict version"? For example,

ff(x::Float64,y::Number) = 5x + 2y ff(x::Number,y::Int) = x - y

ff (generic function with 5 methods)

What should it call on f(2.0,5) now? ff(x::Float64,y::Number) and ff(x::Number,y::Int) are both more strict than ff(x::Number,y::Number), so one of them should be called, but neither are more strict than each other, and thus you will end up with an ambiguity error:

ff(2.0,5)

ERROR: MethodError: ff(::Float64, ::Int64) is ambiguous.

Candidates:

ff(x::Number, y::Int64)

@ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:3

ff(x::Float64, y::Number)

@ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:2

Possible fix, define

ff(::Float64, ::Int64)

Untyped Containers

One way to ruin inference is to use an untyped container. For example, the array constructors use type inference themselves to know what their container type will be. Therefore,

a = [1.0,2.0,3.0]

3-element Vector{Float64}:

1.0

2.0

3.0

uses type inference on its inputs to know that it should be something that holds Float64 values, and thus it is a 1-dimensional array of Float64 values, or Array{Float64,1}. The accesses:

a[1]

1.0

are then inferred, since this is just the function getindex(a::Array{T},i) where T which is a function that will produce something of type T, the element type of the array. However, if we tell Julia to make an array with element type Any:

b = ["1.0",2,2.0]

3-element Vector{Any}:

"1.0"

2

2.0

(here, Julia falls back to Any because it cannot promote the values to the same type), then the best inference can do on the output is to say it could have any type:

function bad_container(a) a[2] end @code_warntype bad_container(a)

MethodInstance for bad_container(::Vector{Float64})

from bad_container(a) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/

optimizing.jmd:2

Arguments

#self#::Core.Const(Main.bad_container)

a::Vector{Float64}

Body::Float64

1 ─ %1 = Base.getindex(a, 2)::Float64

└── return %1

@code_warntype bad_container(b)

MethodInstance for bad_container(::Vector{Any})

from bad_container(a) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/

optimizing.jmd:2

Arguments

#self#::Core.Const(Main.bad_container)

a::Vector{Any}

Body::ANY

1 ─ %1 = Base.getindex(a, 2)::ANY

└── return %1

This is one common way that type inference can breakdown. For example, even if the array is all numbers, we can still break inference:

x = Number[1.0,3] function q(x) a = 4 b = 2 c = f(x[1],a) d = f(b,c) f(d,x[2]) end @code_warntype q(x)

MethodInstance for q(::Vector{Number})

from q(x) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.j

md:3

Arguments

#self#::Core.Const(Main.q)

x::Vector{Number}

Locals

d::ANY

c::ANY

b::Int64

a::Int64

Body::ANY

1 ─ (a = 4)

│ (b = 2)

│ %3 = Main.f::Core.Const(Main.f)

│ %4 = Base.getindex(x, 1)::NUMBER

│ %5 = a::Core.Const(4)

│ (c = (%3)(%4, %5))

│ %7 = b::Core.Const(2)

│ %8 = c::ANY

│ (d = Main.f(%7, %8))

│ %10 = Main.f::Core.Const(Main.f)

│ %11 = d::ANY

│ %12 = Base.getindex(x, 2)::NUMBER

│ %13 = (%10)(%11, %12)::ANY

└── return %13

Here the type inference algorithm quickly gives up and infers to Any, losing all specialization and automatically switching to Python-style runtime type checking.

Type definitions

Value types and isbits

In Julia, types which can fully inferred and which are composed of primitive or isbits types are value types. This means that, inside of an array, their values are the values of the type itself, and not a pointer to the values.

You can check if the type is a value type through isbits:

isbits(1.0)

true

Note that a Julia struct which holds isbits values is isbits as well, if it's fully inferred:

struct MyComplex real::Float64 imag::Float64 end isbits(MyComplex(1.0,1.0))

true

We can see that the compiler knows how to use this efficiently since it knows that what comes out is always Float64:

Base.:+(a::MyComplex,b::MyComplex) = MyComplex(a.real+b.real,a.imag+b.imag) Base.:+(a::MyComplex,b::Int) = MyComplex(a.real+b,a.imag) Base.:+(b::Int,a::MyComplex) = MyComplex(a.real+b,a.imag) g(MyComplex(1.0,1.0),MyComplex(1.0,1.0))

MyComplex(8.0, 2.0)

@code_warntype g(MyComplex(1.0,1.0),MyComplex(1.0,1.0))

MethodInstance for g(::MyComplex, ::MyComplex) from g(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin g.jmd:2 Arguments #self#::Core.Const(Main.g) x::MyComplex y::MyComplex Locals d::MyComplex c::MyComplex b::Int64 a::Int64 Body::MyComplex 1 ─ (a = 4) │ (b = 2) │ %3 = a::Core.Const(4) │ (c = Main.f(x, %3)) │ %5 = b::Core.Const(2) │ %6 = c::MyComplex │ (d = Main.f(%5, %6)) │ %8 = d::MyComplex │ %9 = Main.f(%8, y)::MyComplex └── return %9

@code_llvm g(MyComplex(1.0,1.0),MyComplex(1.0,1.0))

; Function Signature: g(Main.MyComplex, Main.MyComplex)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

define void @julia_g_25180(ptr noalias nocapture noundef nonnull sret([2 x

double]) align 8 dereferenceable(16) %sret_return, ptr nocapture noundef no

nnull readonly align 8 dereferenceable(16) %"x::MyComplex", ptr nocapture n

oundef nonnull readonly align 8 dereferenceable(16) %"y::MyComplex") #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:3 within `+` @ promotion.jl:429 @ float.jl:491

%"x::MyComplex.real_ptr.unbox" = load double, ptr %"x::MyComplex", alig

n 8

%0 = fadd double %"x::MyComplex.real_ptr.unbox", 4.000000e+00

; ││ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:3 within `+`

; ││┌ @ Base.jl:49 within `getproperty`

%"x::MyComplex.imag_ptr" = getelementptr inbounds [2 x double], ptr %"

x::MyComplex", i64 0, i64 1

; └└└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:4 within `+` @ promotion.jl:429 @ float.jl:491

%1 = fadd double %0, 2.000000e+00

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:2 within `+` @ float.jl:491

%"x::MyComplex.imag_ptr.unbox" = load double, ptr %"x::MyComplex.imag_p

tr", align 8

%2 = load <2 x double>, ptr %"y::MyComplex", align 8

%3 = insertelement <2 x double> poison, double %1, i64 0

%4 = insertelement <2 x double> %3, double %"x::MyComplex.imag_ptr.unbo

x", i64 1

%5 = fadd <2 x double> %2, %4

; ││ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:2 within `+`

; ││┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.j

md:3 within `MyComplex`

store <2 x double> %5, ptr %sret_return, align 8

ret void

; └└└

}

Note that the compiled code simply works directly on the double pieces. We can also make this be concrete without pre-specifying that the values always have to be Float64 by using a type parameter.

struct MyParameterizedComplex{T} real::T imag::T end isbits(MyParameterizedComplex(1.0,1.0))

true

Note that MyParameterizedComplex{T} is a concrete type for every T: it is a shorthand form for defining a whole family of types.

Base.:+(a::MyParameterizedComplex,b::MyParameterizedComplex) = MyParameterizedComplex(a.real+b.real,a.imag+b.imag) Base.:+(a::MyParameterizedComplex,b::Int) = MyParameterizedComplex(a.real+b,a.imag) Base.:+(b::Int,a::MyParameterizedComplex) = MyParameterizedComplex(a.real+b,a.imag) g(MyParameterizedComplex(1.0,1.0),MyParameterizedComplex(1.0,1.0))

MyParameterizedComplex{Float64}(8.0, 2.0)

@code_warntype g(MyParameterizedComplex(1.0,1.0),MyParameterizedComplex(1.0,1.0))

MethodInstance for g(::MyParameterizedComplex{Float64}, ::MyParameterizedCo

mplex{Float64})

from g(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin

g.jmd:2

Arguments

#self#::Core.Const(Main.g)

x::MyParameterizedComplex{Float64}

y::MyParameterizedComplex{Float64}

Locals

d::MyParameterizedComplex{Float64}

c::MyParameterizedComplex{Float64}

b::Int64

a::Int64

Body::MyParameterizedComplex{Float64}

1 ─ (a = 4)

│ (b = 2)

│ %3 = a::Core.Const(4)

│ (c = Main.f(x, %3))

│ %5 = b::Core.Const(2)

│ %6 = c::MyParameterizedComplex{Float64}

│ (d = Main.f(%5, %6))

│ %8 = d::MyParameterizedComplex{Float64}

│ %9 = Main.f(%8, y)::MyParameterizedComplex{Float64}

└── return %9

See that this code also automatically works and compiles efficiently for Float32 as well:

@code_warntype g(MyParameterizedComplex(1.0f0,1.0f0),MyParameterizedComplex(1.0f0,1.0f0))

MethodInstance for g(::MyParameterizedComplex{Float32}, ::MyParameterizedCo

mplex{Float32})

from g(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin

g.jmd:2

Arguments

#self#::Core.Const(Main.g)

x::MyParameterizedComplex{Float32}

y::MyParameterizedComplex{Float32}

Locals

d::MyParameterizedComplex{Float32}

c::MyParameterizedComplex{Float32}

b::Int64

a::Int64

Body::MyParameterizedComplex{Float32}

1 ─ (a = 4)

│ (b = 2)

│ %3 = a::Core.Const(4)

│ (c = Main.f(x, %3))

│ %5 = b::Core.Const(2)

│ %6 = c::MyParameterizedComplex{Float32}

│ (d = Main.f(%5, %6))

│ %8 = d::MyParameterizedComplex{Float32}

│ %9 = Main.f(%8, y)::MyParameterizedComplex{Float32}

└── return %9

@code_llvm g(MyParameterizedComplex(1.0f0,1.0f0),MyParameterizedComplex(1.0f0,1.0f0))

; Function Signature: g(Main.MyParameterizedComplex{Float32}, Main.MyParame

terizedComplex{Float32})

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

define [2 x float] @julia_g_25256(ptr nocapture noundef nonnull readonly al

ign 4 dereferenceable(8) %"x::MyParameterizedComplex", ptr nocapture nounde

f nonnull readonly align 4 dereferenceable(8) %"y::MyParameterizedComplex")

#0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:3 within `+` @ promotion.jl:429 @ float.jl:491

%"x::MyParameterizedComplex.real_ptr.unbox" = load float, ptr %"x::MyPa

rameterizedComplex", align 4

%0 = fadd float %"x::MyParameterizedComplex.real_ptr.unbox", 4.000000e+

00

; ││ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:3 within `+`

; ││┌ @ Base.jl:49 within `getproperty`

%"x::MyParameterizedComplex.imag_ptr" = getelementptr inbounds [2 x fl

oat], ptr %"x::MyParameterizedComplex", i64 0, i64 1

; └└└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:4 within `+` @ promotion.jl:429 @ float.jl:491

%1 = fadd float %0, 2.000000e+00

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

; │┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:2 within `+` @ float.jl:491

%"y::MyParameterizedComplex.real_ptr.unbox" = load float, ptr %"y::MyPa

rameterizedComplex", align 4

%2 = fadd float %"y::MyParameterizedComplex.real_ptr.unbox", %1

; ││ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:2 within `+`

; ││┌ @ Base.jl:49 within `getproperty`

%"y::MyParameterizedComplex.imag_ptr" = getelementptr inbounds [2 x fl

oat], ptr %"y::MyParameterizedComplex", i64 0, i64 1

; ││└

; ││ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:2 within `+` @ float.jl:491

%"x::MyParameterizedComplex.imag_ptr.unbox" = load float, ptr %"x::MyPa

rameterizedComplex.imag_ptr", align 4

%"y::MyParameterizedComplex.imag_ptr.unbox" = load float, ptr %"y::MyPa

rameterizedComplex.imag_ptr", align 4

%3 = fadd float %"x::MyParameterizedComplex.imag_ptr.unbox", %"y::MyPar

ameterizedComplex.imag_ptr.unbox"

; ││ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jm

d:2 within `+`

; ││┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.j

md:3 within `MyParameterizedComplex`

%"new::MyParameterizedComplex.unbox.fca.0.insert" = insertvalue [2 x f

loat] zeroinitializer, float %2, 0

%"new::MyParameterizedComplex.unbox.fca.1.insert" = insertvalue [2 x f

loat] %"new::MyParameterizedComplex.unbox.fca.0.insert", float %3, 1

ret [2 x float] %"new::MyParameterizedComplex.unbox.fca.1.insert"

; └└└

}

It is important to know that if there is any piece of a type which doesn't contain type information, then it cannot be isbits because then it would have to be compiled in such a way that the size is not known in advance. For example:

struct MySlowComplex real imag end isbits(MySlowComplex(1.0,1.0))

false

Base.:+(a::MySlowComplex,b::MySlowComplex) = MySlowComplex(a.real+b.real,a.imag+b.imag) Base.:+(a::MySlowComplex,b::Int) = MySlowComplex(a.real+b,a.imag) Base.:+(b::Int,a::MySlowComplex) = MySlowComplex(a.real+b,a.imag) g(MySlowComplex(1.0,1.0),MySlowComplex(1.0,1.0))

MySlowComplex(8.0, 2.0)

@code_warntype g(MySlowComplex(1.0,1.0),MySlowComplex(1.0,1.0))

MethodInstance for g(::MySlowComplex, ::MySlowComplex) from g(x, y) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizin g.jmd:2 Arguments #self#::Core.Const(Main.g) x::MySlowComplex y::MySlowComplex Locals d::MySlowComplex c::MySlowComplex b::Int64 a::Int64 Body::MySlowComplex 1 ─ (a = 4) │ (b = 2) │ %3 = a::Core.Const(4) │ (c = Main.f(x, %3)) │ %5 = b::Core.Const(2) │ %6 = c::MySlowComplex │ (d = Main.f(%5, %6)) │ %8 = d::MySlowComplex │ %9 = Main.f(%8, y)::MySlowComplex └── return %9

@code_llvm g(MySlowComplex(1.0,1.0),MySlowComplex(1.0,1.0))

; Function Signature: g(Main.MySlowComplex, Main.MySlowComplex)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `g`

define void @julia_g_25341(ptr noalias nocapture noundef nonnull sret([2 x

ptr]) align 8 dereferenceable(16) %sret_return, ptr nocapture noundef nonnu

ll readonly align 8 dereferenceable(16) %"x::MySlowComplex", ptr nocapture

noundef nonnull readonly align 8 dereferenceable(16) %"y::MySlowComplex") #

0 {

top:

%gcframe1 = alloca [8 x ptr], align 16

call void @llvm.memset.p0.i64(ptr align 16 %gcframe1, i8 0, i64 64, i1 tr

ue)

%0 = getelementptr inbounds ptr, ptr %gcframe1, i64 6

%1 = getelementptr inbounds ptr, ptr %gcframe1, i64 4

%2 = getelementptr inbounds ptr, ptr %gcframe1, i64 2

%thread_ptr = call ptr asm "movq %fs:0, $0", "=r"() #13

%tls_ppgcstack = getelementptr i8, ptr %thread_ptr, i64 -8

%tls_pgcstack = load ptr, ptr %tls_ppgcstack, align 8

store i64 24, ptr %gcframe1, align 16

%frame.prev = getelementptr inbounds ptr, ptr %gcframe1, i64 1

%task.gcstack = load ptr, ptr %tls_pgcstack, align 8

store ptr %task.gcstack, ptr %frame.prev, align 8

store ptr %gcframe1, ptr %tls_pgcstack, align 8

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

call void @"j_+_25345"(ptr noalias nocapture noundef nonnull sret([2 x p

tr]) %2, ptr nocapture nonnull readonly %"x::MySlowComplex", i64 signext 4)

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

call void @"j_+_25346"(ptr noalias nocapture noundef nonnull sret([2 x p

tr]) %1, i64 signext 2, ptr nocapture nonnull readonly %2)

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

7 within `g`

; ┌ @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd

:2 within `f`

call void @"j_+_25347"(ptr noalias nocapture noundef nonnull sret([2 x p

tr]) %0, ptr nocapture nonnull readonly %1, ptr nocapture nonnull readonly

%"y::MySlowComplex")

call void @llvm.memcpy.p0.p0.i64(ptr noundef nonnull align 8 dereference

able(16) %sret_return, ptr noundef nonnull align 16 dereferenceable(16) %0,

i64 16, i1 false)

%frame.prev5 = load ptr, ptr %frame.prev, align 8

store ptr %frame.prev5, ptr %tls_pgcstack, align 8

ret void

; └

}

struct MySlowComplex2 real::AbstractFloat imag::AbstractFloat end isbits(MySlowComplex2(1.0,1.0))

false

Base.:+(a::MySlowComplex2,b::MySlowComplex2) = MySlowComplex2(a.real+b.real,a.imag+b.imag) Base.:+(a::MySlowComplex2,b::Int) = MySlowComplex2(a.real+b,a.imag) Base.:+(b::Int,a::MySlowComplex2) = MySlowComplex2(a.real+b,a.imag) g(MySlowComplex2(1.0,1.0),MySlowComplex2(1.0,1.0))

MySlowComplex2(8.0, 2.0)

Here's the timings:

a = MyComplex(1.0,1.0) b = MyComplex(2.0,1.0) @btime g(a,b)

20.962 ns (1 allocation: 32 bytes) MyComplex(9.0, 2.0)

a = MyParameterizedComplex(1.0,1.0) b = MyParameterizedComplex(2.0,1.0) @btime g(a,b)

21.012 ns (1 allocation: 32 bytes)

MyParameterizedComplex{Float64}(9.0, 2.0)

a = MySlowComplex(1.0,1.0) b = MySlowComplex(2.0,1.0) @btime g(a,b)

105.074 ns (5 allocations: 96 bytes) MySlowComplex(9.0, 2.0)

a = MySlowComplex2(1.0,1.0) b = MySlowComplex2(2.0,1.0) @btime g(a,b)

531.784 ns (14 allocations: 288 bytes) MySlowComplex2(9.0, 2.0)

Note on Julia

Note that, because of these type specialization, value types, etc. properties, the number types, even ones such as Int, Float64, and Complex, are all themselves implemented in pure Julia! Thus even basic pieces can be implemented in Julia with full performance, given one uses the features correctly.

Note on isbits

Note that a type which is mutable struct will not be isbits. This means that mutable structs will be a pointer to a heap allocated object, unless it's shortlived and the compiler can erase its construction. Also, note that isbits compiles down to bit operations from pure Julia, which means that these types can directly compile to GPU kernels through CUDAnative without modification.

Function Barriers

Since functions automatically specialize on their input types in Julia, we can use this to our advantage in order to make an inner loop fully inferred. For example, take the code from above but with a loop:

function r(x) a = 4 b = 2 for i in 1:100 c = f(x[1],a) d = f(b,c) a = f(d,x[2]) end a end @btime r(x)

5.965 μs (300 allocations: 4.69 KiB) 604.0

In here, the loop variables are not inferred and thus this is really slow. However, we can force a function call in the middle to end up with specialization and in the inner loop be stable:

s(x) = _s(x[1],x[2]) function _s(x1,x2) a = 4 b = 2 for i in 1:100 c = f(x1,a) d = f(b,c) a = f(d,x2) end a end @btime s(x)

297.622 ns (1 allocation: 16 bytes) 604.0

Notice that this algorithm still doesn't infer:

@code_warntype s(x)

MethodInstance for s(::Vector{Number})

from s(x) @ Main ~/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.j

md:2

Arguments

#self#::Core.Const(Main.s)

x::Vector{Number}

Body::ANY

1 ─ %1 = Main._s::Core.Const(Main._s)

│ %2 = Base.getindex(x, 1)::NUMBER

│ %3 = Base.getindex(x, 2)::NUMBER

│ %4 = (%1)(%2, %3)::ANY

└── return %4

since the output of _s isn't inferred, but while it's in _s it will have specialized on the fact that x[1] is a Float64 while x[2] is a Int, making that inner loop fast. In fact, it will only need to pay one dynamic dispatch, i.e. a multiple dispatch determination that happens at runtime. Notice that whenever functions are inferred, the dispatching is static since the choice of the dispatch is already made and compiled into the LLVM IR.

Specialization at Compile Time

Julia code will specialize at compile time if it can prove something about the result. For example:

function fff(x) if x isa Int y = 2 else y = 4.0 end x + y end

fff (generic function with 1 method)

You might think this function has a branch, but in reality Julia can determine whether x is an Int or not at compile time, so it will actually compile it away and just turn it into the function x+2 or x+4.0:

@code_llvm fff(5)

; Function Signature: fff(Int64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `fff`

define i64 @julia_fff_25804(i64 signext %"x::Int64") #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

8 within `fff`

; ┌ @ int.jl:87 within `+`

%0 = add i64 %"x::Int64", 2

ret i64 %0

; └

}

@code_llvm fff(2.0)

; Function Signature: fff(Float64)

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `fff`

define double @julia_fff_25807(double %"x::Float64") #0 {

top:

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

8 within `fff`

; ┌ @ float.jl:491 within `+`

%0 = fadd double %"x::Float64", 4.000000e+00

ret double %0

; └

}

Thus one does not need to worry about over-optimizing since in the obvious cases the compiler will actually remove all of the extra pieces when it can!

Global Scope and Optimizations

This discussion shows how Julia's optimizations all apply during function specialization times. Thus calling Julia functions is fast. But what about when doing something outside of the function, like directly in a module or in the REPL?

@btime for j in 1:100, i in 1:100 global A,B,C C[i,j] = A[i,j] + B[i,j] end

809.163 μs (30000 allocations: 468.75 KiB)

This is very slow because the types of A, B, and C cannot be inferred. Why can't they be inferred? Well, at any time in the dynamic REPL scope I can do something like C = "haha now a string!", and thus it cannot specialize on the types currently existing in the REPL (since asynchronous changes could also occur), and therefore it defaults back to doing a type check at every single function which slows it down. Moral of the story, Julia functions are fast but its global scope is too dynamic to be optimized.

Summary

Julia is not fast because of its JIT, it's fast because of function specialization and type inference

Type stable functions allow inference to fully occur

Multiple dispatch works within the function specialization mechanism to create overhead-free compile time controls

Julia will specialize the generic functions

Making sure values are concretely typed in inner loops is essential for performance

Overheads of Individual Operations

Now let's dig even a little deeper. Everything the processor does has a cost. A great chart to keep in mind is this classic one. A few things should immediately jump out to you:

Simple arithmetic, like floating point additions, are super cheap. ~1 clock cycle, or a few nanoseconds.

Processors do branch prediction on

ifstatements. If the code goes down the predicted route, theifstatement costs ~1-2 clock cycles. If it goes down the wrong route, then it will take ~10-20 clock cycles. This means that predictable branches, like ones with clear patterns or usually the same output, are much cheaper (almost free) than unpredictable branches.Function calls are expensive: 15-60 clock cycles!

RAM reads are very expensive, with lower caches less expensive.

Bounds Checking

Let's check the LLVM IR on one of our earlier loops:

function inner_noalloc!(C,A,B) for j in 1:100, i in 1:100 val = A[i,j] + B[i,j] C[i,j] = val[1] end end @code_llvm inner_noalloc!(C,A,B)

; Function Signature: inner_noalloc!(Array{Float64, 2}, Array{Float64, 2},

Array{Float64, 2})

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `inner_noalloc!`

define nonnull ptr @"japi1_inner_noalloc!_25871"(ptr %"function::Core.Funct

ion", ptr noalias nocapture noundef readonly %"args::Any[]", i32 %"nargs::U

Int32") #0 {

top:

%stackargs = alloca ptr, align 8

store volatile ptr %"args::Any[]", ptr %stackargs, align 8

%"new::Tuple" = alloca [2 x i64], align 8

%"new::Tuple33" = alloca [2 x i64], align 8

%"new::Tuple76" = alloca [2 x i64], align 8

%0 = load ptr, ptr %"args::Any[]", align 8

%1 = getelementptr inbounds ptr, ptr %"args::Any[]", i64 1

%2 = load ptr, ptr %1, align 8

%3 = getelementptr inbounds ptr, ptr %"args::Any[]", i64 2

%4 = load ptr, ptr %3, align 8

%5 = getelementptr inbounds i8, ptr %2, i64 16

%.size.sroa.0.0.copyload = load i64, ptr %5, align 8

%.size.sroa.2.0..sroa_idx = getelementptr inbounds i8, ptr %2, i64 24

%.size.sroa.2.0.copyload = load i64, ptr %.size.sroa.2.0..sroa_idx, align

8

%6 = getelementptr inbounds i8, ptr %4, i64 16

%.size35.sroa.2.0..sroa_idx = getelementptr inbounds i8, ptr %4, i64 24

%7 = getelementptr inbounds i8, ptr %0, i64 16

%.size78.sroa.2.0..sroa_idx = getelementptr inbounds i8, ptr %0, i64 24

%8 = add i64 %.size.sroa.0.0.copyload, 1

%9 = add i64 %.size.sroa.0.0.copyload, -1

%10 = shl i64 %.size.sroa.0.0.copyload, 3

br label %L2

L2: ; preds = %L178, %top

%indvar = phi i64 [ %indvar.next, %L178 ], [ 0, %top ]

%value_phi = phi i64 [ %70, %L178 ], [ 1, %top ]

%11 = shl nuw nsw i64 %indvar, 3

%12 = mul i64 %10, %indvar

%13 = add i64 %12, 8

%14 = add i64 %12, 16

%15 = add nsw i64 %value_phi, -1

%16 = icmp uge i64 %15, %.size.sroa.2.0.copyload

%17 = mul i64 %.size.sroa.0.0.copyload, %15

%18 = load ptr, ptr %2, align 8

%.size35.sroa.0.0.copyload = load i64, ptr %6, align 8

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

3 within `inner_noalloc!`

%.size35.sroa.0.0.copyload.fr = freeze i64 %.size35.sroa.0.0.copyload

%19 = mul i64 %.size35.sroa.0.0.copyload.fr, %15

%20 = load ptr, ptr %4, align 8

%.size78.sroa.0.0.copyload = load i64, ptr %7, align 8

%.size78.sroa.0.0.copyload.fr = freeze i64 %.size78.sroa.0.0.copyload

%.size78.sroa.2.0.copyload = load i64, ptr %.size78.sroa.2.0..sroa_idx, a

lign 8

%21 = icmp uge i64 %15, %.size78.sroa.2.0.copyload

%22 = load ptr, ptr %0, align 8

%23 = mul i64 %.size78.sroa.0.0.copyload.fr, %15

%.fr = freeze i1 %16

br i1 %.fr, label %L31, label %L2.split

L2.split: ; preds = %L2

%.size35.sroa.2.0.copyload = load i64, ptr %.size35.sroa.2.0..sroa_idx, a

lign 8

%24 = icmp uge i64 %15, %.size35.sroa.2.0.copyload

%.fr240 = freeze i1 %24

br i1 %.fr240, label %L2.split.split.us, label %L2.split.split

L2.split.split.us: ; preds = %L2.split

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc!`

; ┌ @ array.jl:929 within `getindex`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%25 = icmp eq i64 %.size.sroa.0.0.copyload, 0

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %25, label %L31, label %L88

L2.split.split: ; preds = %L2.split

%.fr359 = freeze i1 %21

br i1 %.fr359, label %L2.split.split.split.us, label %L2.split.split.sp

lit

L2.split.split.split.us: ; preds = %L2.split.split

; ││ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%26 = icmp eq i64 %.size.sroa.0.0.copyload, 0

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %26, label %L31, label %L34.us313

L34.us313: ; preds = %L2.split.split

.split.us

; ││ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%27 = icmp eq i64 %.size35.sroa.0.0.copyload.fr, 0

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %27, label %L88, label %L150

L2.split.split.split: ; preds = %L2.split.split

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

3 within `inner_noalloc!`

%28 = add i64 %.size35.sroa.0.0.copyload.fr, 1

%29 = add i64 %.size78.sroa.0.0.copyload.fr, 1

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc!`

; ┌ @ array.jl:929 within `getindex`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%exitcond.peel = icmp eq i64 %.size.sroa.0.0.copyload, 0

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %exitcond.peel, label %L31, label %L34.peel

L34.peel: ; preds = %L2.split.split

.split

; │└

; │ @ array.jl:930 within `getindex` @ essentials.jl:917

%30 = getelementptr inbounds double, ptr %18, i64 %17

%31 = load double, ptr %30, align 8

; │ @ array.jl:929 within `getindex`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%exitcond499.peel = icmp eq i64 %.size35.sroa.0.0.copyload.fr, 0

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %exitcond499.peel, label %L88, label %L91.peel

L91.peel: ; preds = %L34.peel

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc!`

; ┌ @ array.jl:993 within `setindex!`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%exitcond500.peel = icmp eq i64 %.size78.sroa.0.0.copyload.fr, 0

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %exitcond500.peel, label %L150, label %L4.peel.next

L4.peel.next: ; preds = %L91.peel

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%32 = getelementptr inbounds double, ptr %20, i64 %19

%33 = load double, ptr %32, align 8

; └

; ┌ @ float.jl:491 within `+`

%34 = fadd double %31, %33

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc!`

; ┌ @ array.jl:994 within `setindex!`

%35 = getelementptr inbounds double, ptr %22, i64 %23

store double %34, ptr %35, align 8

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

3 within `inner_noalloc!`

%36 = add i64 %.size78.sroa.0.0.copyload.fr, -1

%37 = add i64 %.size35.sroa.0.0.copyload.fr, -1

%umin653 = call i64 @llvm.umin.i64(i64 %36, i64 %37)

%umin654 = call i64 @llvm.umin.i64(i64 %umin653, i64 %9)

%umin655 = call i64 @llvm.umin.i64(i64 %umin654, i64 98)

%38 = add nuw nsw i64 %umin655, 1

%min.iters.check = icmp ult i64 %umin655, 16

br i1 %min.iters.check, label %scalar.ph, label %vector.memcheck

vector.memcheck: ; preds = %L4.peel.next

%uglygep = getelementptr i8, ptr %22, i64 8

%39 = mul i64 %.size78.sroa.0.0.copyload.fr, %11

%uglygep639 = getelementptr i8, ptr %uglygep, i64 %39

%uglygep640 = getelementptr i8, ptr %22, i64 16

%40 = shl nuw nsw i64 %umin655, 3

%41 = add i64 %39, %40

%uglygep643 = getelementptr i8, ptr %uglygep640, i64 %41

%uglygep644 = getelementptr i8, ptr %18, i64 %13

%42 = add i64 %14, %40

%uglygep645 = getelementptr i8, ptr %18, i64 %42

%uglygep646 = getelementptr i8, ptr %20, i64 8

%43 = mul i64 %.size35.sroa.0.0.copyload.fr, %11

%uglygep647 = getelementptr i8, ptr %uglygep646, i64 %43

%uglygep648 = getelementptr i8, ptr %20, i64 16

%44 = add i64 %43, %40

%uglygep649 = getelementptr i8, ptr %uglygep648, i64 %44

%bound0 = icmp ult ptr %uglygep639, %uglygep645

%bound1 = icmp ult ptr %uglygep644, %uglygep643

%found.conflict = and i1 %bound0, %bound1

%bound0650 = icmp ult ptr %uglygep639, %uglygep649

%bound1651 = icmp ult ptr %uglygep647, %uglygep643

%found.conflict652 = and i1 %bound0650, %bound1651

%conflict.rdx = or i1 %found.conflict, %found.conflict652

br i1 %conflict.rdx, label %scalar.ph, label %vector.ph

vector.ph: ; preds = %vector.memchec

k

%n.mod.vf = and i64 %38, 3

%45 = icmp eq i64 %n.mod.vf, 0

%46 = select i1 %45, i64 4, i64 %n.mod.vf

%n.vec = sub nsw i64 %38, %46

%ind.end = add nsw i64 %n.vec, 2

br label %vector.body

vector.body: ; preds = %vector.body, %

vector.ph

%index = phi i64 [ 0, %vector.ph ], [ %index.next, %vector.body ]

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc!`

; ┌ @ array.jl:929 within `getindex`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:86 within `-`

%47 = or i64 %index, 1

; │└└└└

; │ @ array.jl:930 within `getindex` @ essentials.jl:917

%48 = add i64 %47, %17

%49 = getelementptr inbounds double, ptr %18, i64 %48

%wide.load = load <4 x double>, ptr %49, align 8

%50 = add i64 %47, %19

%51 = getelementptr inbounds double, ptr %20, i64 %50

%wide.load656 = load <4 x double>, ptr %51, align 8

; └

; ┌ @ float.jl:491 within `+`

%52 = fadd <4 x double> %wide.load, %wide.load656

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc!`

; ┌ @ array.jl:994 within `setindex!`

%53 = add i64 %47, %23

%54 = getelementptr inbounds double, ptr %22, i64 %53

store <4 x double> %52, ptr %54, align 8

%index.next = add nuw i64 %index, 4

%55 = icmp eq i64 %index.next, %n.vec

br i1 %55, label %scalar.ph, label %vector.body

scalar.ph: ; preds = %vector.body, %

vector.memcheck, %L4.peel.next

%bc.resume.val = phi i64 [ 2, %L4.peel.next ], [ 2, %vector.memcheck ],

[ %ind.end, %vector.body ]

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

3 within `inner_noalloc!`

br label %L4

L4: ; preds = %L153, %scalar.

ph

%value_phi2 = phi i64 [ %bc.resume.val, %scalar.ph ], [ %69, %L153 ]

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc!`

; ┌ @ array.jl:929 within `getindex`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:86 within `-`

%56 = add nsw i64 %value_phi2, -1

; ││││└

; ││││┌ @ int.jl:513 within `<`

%exitcond = icmp eq i64 %value_phi2, %8

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %exitcond, label %L31, label %L34

L31: ; preds = %L4, %L2.split.

split.split, %L2.split.split.split.us, %L2.split.split.us, %L2

%.us-phi170 = phi i64 [ 1, %L2.split.split.us ], [ 1, %L2.split.split.s

plit.us ], [ %8, %L4 ], [ 1, %L2 ], [ %8, %L2.split.split.split ]

%57 = getelementptr inbounds [2 x i64], ptr %"new::Tuple", i64 0, i64 1

; ││ @ abstractarray.jl:697 within `checkbounds`

store i64 %.us-phi170, ptr %"new::Tuple", align 8

store i64 %value_phi, ptr %57, align 8

; ││ @ abstractarray.jl:699 within `checkbounds`

call void @j_throw_boundserror_25888(ptr nonnull %2, ptr nocapture nonn

ull readonly %"new::Tuple") #7

unreachable

L34: ; preds = %L4

; │└

; │ @ array.jl:930 within `getindex` @ essentials.jl:917

%58 = add i64 %56, %17

%59 = getelementptr inbounds double, ptr %18, i64 %58

%60 = load double, ptr %59, align 8

; │ @ array.jl:929 within `getindex`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%exitcond499 = icmp eq i64 %value_phi2, %28

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %exitcond499, label %L88, label %L91

L88: ; preds = %L34, %L34.peel

, %L34.us313, %L2.split.split.us

%.us-phi236 = phi i64 [ 1, %L2.split.split.us ], [ 1, %L34.us313 ], [ %

28, %L34 ], [ %28, %L34.peel ]

%61 = getelementptr inbounds [2 x i64], ptr %"new::Tuple33", i64 0, i64

1

; ││ @ abstractarray.jl:697 within `checkbounds`

store i64 %.us-phi236, ptr %"new::Tuple33", align 8

store i64 %value_phi, ptr %61, align 8

; ││ @ abstractarray.jl:699 within `checkbounds`

call void @j_throw_boundserror_25888(ptr nonnull %4, ptr nocapture nonn

ull readonly %"new::Tuple33") #7

unreachable

L91: ; preds = %L34

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc!`

; ┌ @ array.jl:993 within `setindex!`

; │┌ @ abstractarray.jl:699 within `checkbounds` @ abstractarray.jl:681

; ││┌ @ abstractarray.jl:725 within `checkbounds_indices`

; │││┌ @ abstractarray.jl:754 within `checkindex`

; ││││┌ @ int.jl:513 within `<`

%exitcond500 = icmp eq i64 %value_phi2, %29

; ││└└└

; ││ @ abstractarray.jl:699 within `checkbounds`

br i1 %exitcond500, label %L150, label %L153

L150: ; preds = %L91, %L91.peel

, %L34.us313

%.us-phi352 = phi i64 [ 1, %L34.us313 ], [ %29, %L91 ], [ %29, %L91.pee

l ]

%62 = getelementptr inbounds [2 x i64], ptr %"new::Tuple76", i64 0, i64

1

; ││ @ abstractarray.jl:697 within `checkbounds`

store i64 %.us-phi352, ptr %"new::Tuple76", align 8

store i64 %value_phi, ptr %62, align 8

; ││ @ abstractarray.jl:699 within `checkbounds`

call void @j_throw_boundserror_25888(ptr nonnull %0, ptr nocapture nonn

ull readonly %"new::Tuple76") #7

unreachable

L153: ; preds = %L91

; └└

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%63 = add i64 %56, %19

%64 = getelementptr inbounds double, ptr %20, i64 %63

%65 = load double, ptr %64, align 8

; └

; ┌ @ float.jl:491 within `+`

%66 = fadd double %60, %65

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc!`

; ┌ @ array.jl:994 within `setindex!`

%67 = add i64 %56, %23

%68 = getelementptr inbounds double, ptr %22, i64 %67

store double %66, ptr %68, align 8

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

6 within `inner_noalloc!`

; ┌ @ range.jl:908 within `iterate`

; │┌ @ promotion.jl:639 within `==`

%.not.not = icmp eq i64 %value_phi2, 100

; │└

%69 = add nuw nsw i64 %value_phi2, 1

; └

br i1 %.not.not, label %L178, label %L4

L178: ; preds = %L153

; ┌ @ range.jl:908 within `iterate`

; │┌ @ promotion.jl:639 within `==`

%.not.not134 = icmp eq i64 %value_phi, 100

; │└

%70 = add nuw nsw i64 %value_phi, 1

; └

%indvar.next = add i64 %indvar, 1

br i1 %.not.not134, label %L189, label %L2

L189: ; preds = %L178

%jl_nothing = load ptr, ptr @jl_nothing, align 8

ret ptr %jl_nothing

}

Notice that this getelementptr inbounds stuff is bounds checking. Julia, like all other high level languages, enables bounds checking by default in order to not allow the user to index outside of an array. Indexing outside of an array is dangerous: it can quite easily segfault your system if you change some memory that is unknown beyond your actual array. Thus Julia throws an error:

A[101,1]

ERROR: BoundsError: attempt to access 100×100 Matrix{Float64} at index [101, 1]

In tight inner loops, we can remove this bounds checking process using the @inbounds macro:

function inner_noalloc_ib!(C,A,B) @inbounds for j in 1:100, i in 1:100 val = A[i,j] + B[i,j] C[i,j] = val[1] end end @btime inner_noalloc!(C,A,B)

3.153 μs (0 allocations: 0 bytes)

@btime inner_noalloc_ib!(C,A,B)

2.361 μs (0 allocations: 0 bytes)

SIMD

Now let's inspect the LLVM IR again:

@code_llvm inner_noalloc_ib!(C,A,B)

; Function Signature: inner_noalloc_ib!(Array{Float64, 2}, Array{Float64, 2

}, Array{Float64, 2})

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

2 within `inner_noalloc_ib!`

define nonnull ptr @"japi1_inner_noalloc_ib!_26259"(ptr %"function::Core.Fu

nction", ptr noalias nocapture noundef readonly %"args::Any[]", i32 %"nargs

::UInt32") #0 {

top:

%stackargs = alloca ptr, align 8

store volatile ptr %"args::Any[]", ptr %stackargs, align 8

%0 = load ptr, ptr %"args::Any[]", align 8

%1 = getelementptr inbounds ptr, ptr %"args::Any[]", i64 1

%2 = load ptr, ptr %1, align 8

%3 = getelementptr inbounds ptr, ptr %"args::Any[]", i64 2

%4 = load ptr, ptr %3, align 8

%5 = getelementptr inbounds i8, ptr %2, i64 16

%.size14.sroa.0.0.copyload = load i64, ptr %5, align 8

%6 = load ptr, ptr %2, align 8

%7 = getelementptr inbounds i8, ptr %4, i64 16

%.size51.sroa.0.0.copyload = load i64, ptr %7, align 8

%8 = load ptr, ptr %4, align 8

%9 = load ptr, ptr %0, align 8

%10 = getelementptr inbounds i8, ptr %0, i64 16

%.size96.sroa.0.0.copyload = load i64, ptr %10, align 8

%11 = shl i64 %.size96.sroa.0.0.copyload, 3

%12 = shl i64 %.size14.sroa.0.0.copyload, 3

%13 = shl i64 %.size51.sroa.0.0.copyload, 3

br label %L2

L2: ; preds = %L178, %top

%indvar = phi i64 [ %indvar.next, %L178 ], [ 0, %top ]

%value_phi = phi i64 [ %696, %L178 ], [ 1, %top ]

%14 = add nsw i64 %value_phi, -1

%15 = mul i64 %.size14.sroa.0.0.copyload, %14

%16 = mul i64 %.size51.sroa.0.0.copyload, %14

%17 = mul i64 %.size96.sroa.0.0.copyload, %14

%18 = mul i64 %13, %indvar

%19 = add i64 %18, 800

%uglygep133 = getelementptr i8, ptr %8, i64 %19

%uglygep132 = getelementptr i8, ptr %8, i64 %18

%20 = mul i64 %12, %indvar

%21 = add i64 %20, 800

%uglygep131 = getelementptr i8, ptr %6, i64 %21

%uglygep130 = getelementptr i8, ptr %6, i64 %20

%22 = mul i64 %11, %indvar

%23 = add i64 %22, 800

%uglygep129 = getelementptr i8, ptr %9, i64 %23

%uglygep = getelementptr i8, ptr %9, i64 %22

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

3 within `inner_noalloc_ib!`

%bound0 = icmp ult ptr %uglygep, %uglygep131

%bound1 = icmp ult ptr %uglygep130, %uglygep129

%found.conflict = and i1 %bound0, %bound1

%bound0134 = icmp ult ptr %uglygep, %uglygep133

%bound1135 = icmp ult ptr %uglygep132, %uglygep129

%found.conflict136 = and i1 %bound0134, %bound1135

%conflict.rdx = or i1 %found.conflict, %found.conflict136

br i1 %conflict.rdx, label %L4, label %vector.body.preheader

vector.body.preheader: ; preds = %L2

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc_ib!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%24 = getelementptr inbounds double, ptr %6, i64 %15

%wide.load = load <4 x double>, ptr %24, align 8

%25 = getelementptr inbounds double, ptr %8, i64 %16

%wide.load137 = load <4 x double>, ptr %25, align 8

; └

; ┌ @ float.jl:491 within `+`

%26 = fadd <4 x double> %wide.load, %wide.load137

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc_ib!`

; ┌ @ array.jl:994 within `setindex!`

%27 = getelementptr inbounds double, ptr %9, i64 %17

store <4 x double> %26, ptr %27, align 8

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc_ib!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%28 = add i64 %15, 4

%29 = getelementptr inbounds double, ptr %6, i64 %28

%wide.load.1 = load <4 x double>, ptr %29, align 8

%30 = add i64 %16, 4

%31 = getelementptr inbounds double, ptr %8, i64 %30

%wide.load137.1 = load <4 x double>, ptr %31, align 8

; └

; ┌ @ float.jl:491 within `+`

%32 = fadd <4 x double> %wide.load.1, %wide.load137.1

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc_ib!`

; ┌ @ array.jl:994 within `setindex!`

%33 = add i64 %17, 4

%34 = getelementptr inbounds double, ptr %9, i64 %33

store <4 x double> %32, ptr %34, align 8

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc_ib!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%35 = add i64 %15, 8

%36 = getelementptr inbounds double, ptr %6, i64 %35

%wide.load.2 = load <4 x double>, ptr %36, align 8

%37 = add i64 %16, 8

%38 = getelementptr inbounds double, ptr %8, i64 %37

%wide.load137.2 = load <4 x double>, ptr %38, align 8

; └

; ┌ @ float.jl:491 within `+`

%39 = fadd <4 x double> %wide.load.2, %wide.load137.2

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc_ib!`

; ┌ @ array.jl:994 within `setindex!`

%40 = add i64 %17, 8

%41 = getelementptr inbounds double, ptr %9, i64 %40

store <4 x double> %39, ptr %41, align 8

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc_ib!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%42 = add i64 %15, 12

%43 = getelementptr inbounds double, ptr %6, i64 %42

%wide.load.3 = load <4 x double>, ptr %43, align 8

%44 = add i64 %16, 12

%45 = getelementptr inbounds double, ptr %8, i64 %44

%wide.load137.3 = load <4 x double>, ptr %45, align 8

; └

; ┌ @ float.jl:491 within `+`

%46 = fadd <4 x double> %wide.load.3, %wide.load137.3

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc_ib!`

; ┌ @ array.jl:994 within `setindex!`

%47 = add i64 %17, 12

%48 = getelementptr inbounds double, ptr %9, i64 %47

store <4 x double> %46, ptr %48, align 8

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

4 within `inner_noalloc_ib!`

; ┌ @ array.jl:930 within `getindex` @ essentials.jl:917

%49 = add i64 %15, 16

%50 = getelementptr inbounds double, ptr %6, i64 %49

%wide.load.4 = load <4 x double>, ptr %50, align 8

%51 = add i64 %16, 16

%52 = getelementptr inbounds double, ptr %8, i64 %51

%wide.load137.4 = load <4 x double>, ptr %52, align 8

; └

; ┌ @ float.jl:491 within `+`

%53 = fadd <4 x double> %wide.load.4, %wide.load137.4

; └

; @ /home/runner/work/SciMLBook/SciMLBook/_weave/lecture02/optimizing.jmd:

5 within `inner_noalloc_ib!`